I vaguely remember someone making a comment in a discussion about sRGB that ran along the lines of:

So then, is sRGB like µ‑law encoding?

This comment was not about the color space itself but about the specific pixel formats nowadays branded as ‘sRGB’. In that sense, the answer is yes. And while the technical details are not exactly the same, the analogy with µ‑law encoding very much nails it.

When you think of sRGB pixel formats as nothing but a special encoding, it becomes clear that using such a format does not automatically make you “very picky about color reproduction”. Hardware vendors used this assumption to rationalize limiting sRGB pixel format support to 8‑bit precision, because people “would never want” sRGB support for anything less. Not true! I’m going to make a case for this later. But first things first.


µ‑law encoding

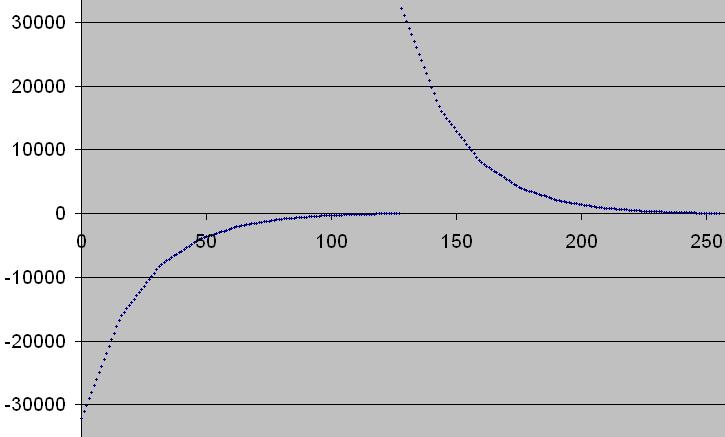

In the audio world, µ‑law encoding is a way to encode individual sample values with 8 bits while the dynamic range of the represented value is around 12 bits. It is the default encoding used in the au file format. The following image shows the encoded sample value for each code point from 0 to 255.
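For reference, the smooth µ‑law curve with µ = 255 maps a sample x in [−1, 1] to sgn(x)·ln(1 + µ|x|)/ln(1 + µ). The sketch below compresses a sample, quantizes it to 8 bits and expands it again. It is a simplified illustration under stated assumptions: it uses the continuous formula and a symmetric code range of −127 to 127, whereas real G.711 codecs (and .au files) use a segmented, piecewise-linear approximation with their own bit layout, so the codes will not match a real encoder. The function names are hypothetical.

```python
import math

MU = 255.0  # the µ parameter used by 8-bit µ-law

def mulaw_compress(x: float) -> float:
    """Continuous µ-law curve: linear sample in [-1, 1] -> companded value in [-1, 1]."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)

def mulaw_expand(y: float) -> float:
    """Inverse of mulaw_compress."""
    return math.copysign(math.expm1(abs(y) * math.log1p(MU)) / MU, y)

def encode8(x: float) -> int:
    """Quantize the companded value to a symmetric code in -127..127.

    Simplified model: this is NOT the G.711 bit layout.
    """
    return round(mulaw_compress(x) * 127)

def decode8(code: int) -> float:
    """Expand an 8-bit code back into a linear sample."""
    return mulaw_expand(code / 127)

for x in (0.9, 0.1, 0.01):
    code = encode8(x)
    print(f"x={x}: code={code}, decoded={decode8(code):.5f}")
```

Note how the quiet sample 0.01 still gets a code near 29 out of 127, i.e. the curve spends many codes on low levels.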

It is worth noting that the technique was originally invented in the analog world to reduce transmission noise. A logarithmic amplifier would non-linearly compress the signal before transmission. Any noise introduced during transmission would then be attenuated at the receiving station when the signal was expanded back to its original form. The gist of this method is to distribute the noise in a perceptually uniform way. Without the compression and expansion, the noise would seem to disproportionately affect the low levels. A similar noise reduction is achieved in the digital domain with a non-linear encoding like µ‑law, but this time it is the apparent quantization noise that is reduced.
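The effect on quantization noise can be checked numerically. The sketch below round-trips a loud, a medium and a quiet sample through plain 8-bit linear quantization and through 8-bit µ‑law (continuous formula with µ = 255 and a symmetric code range, a simplification of real codecs), then prints the relative error of each. One would expect µ‑law to be slightly worse for the loud sample and far better for the quiet ones, which is the perceptually uniform distribution of noise described above. The helper names are hypothetical.

```python
import math

MU = 255.0

def linear8_roundtrip(x: float) -> float:
    """Plain 8-bit linear quantization: the same step of 1/127 everywhere."""
    return round(x * 127) / 127

def mulaw8_roundtrip(x: float) -> float:
    """µ-law: compress, quantize to 8 bits, expand."""
    y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    q = round(y * 127) / 127
    return math.copysign(math.expm1(abs(q) * math.log1p(MU)) / MU, q)

for x in (0.5, 0.05, 0.005):
    lin_err = abs(linear8_roundtrip(x) - x) / x
    mu_err = abs(mulaw8_roundtrip(x) - x) / x
    print(f"x={x:<6} linear rel. error={lin_err:.4f}  µ-law rel. error={mu_err:.4f}")
```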

Gamma and sRGB

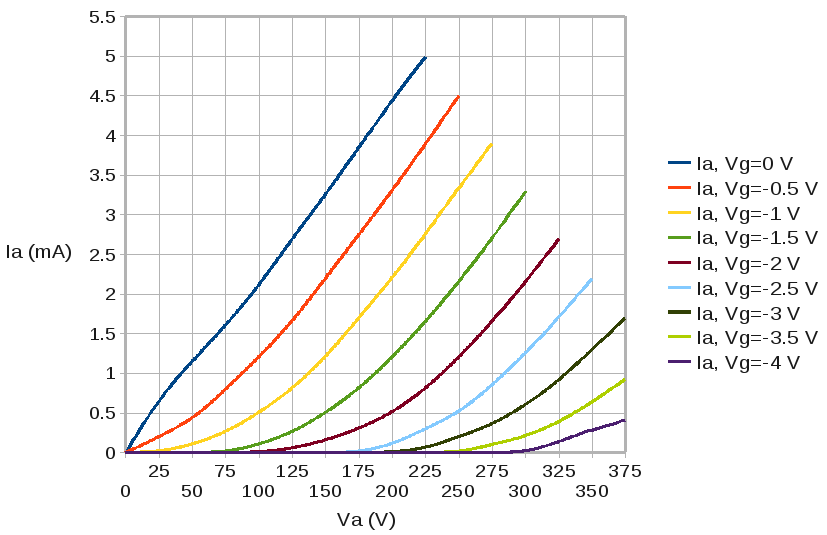

Back in the visual world we have the concept of display gamma, which was developed for television in the 1930’s for entirely the same reasons [1]. The broadcaster would gamma-compress the signal before it is aired and the receiver gamma-expands its back. The triode characteristic of the electron gun was used to implement an approximate 2 to 2.5 power law that follows the curve of human perception (as you can see in the figure below, the implementation of this power law in a CRT is very approximate!). A ‘brightness’ knob on the television set allows the viewer to fine-tune the effective exponent of this relation to the situation at hand. As with audio over telephone lines, the goal was to distribute the noise in a perceptually uniform way.

Triode characteristic for different grid voltages.

CC-BY-SA from Wikepedia
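To make the “perceptually uniform” argument concrete, the sketch below counts how many of the 256 codes of an 8-bit channel land below 10% of full luminance, once with a linear encoding and once with a pure power law. Assumption: an exponent of 2.2 is used as a representative value from the 2 to 2.5 range mentioned above; real CRTs only approximate a power law. The function names are hypothetical.

```python
GAMMA = 2.2  # representative exponent; CRTs only approximate a power law

def gamma_expand(code: int, gamma: float = GAMMA) -> float:
    """Luminance (0..1) a display would produce for an 8-bit code."""
    return (code / 255) ** gamma

def gamma_compress(luminance: float, gamma: float = GAMMA) -> int:
    """8-bit code a broadcaster would send for a linear luminance."""
    return round(255 * luminance ** (1 / gamma))

def codes_below(threshold: float, expand) -> int:
    """Number of 8-bit codes whose decoded luminance is below the threshold."""
    return sum(1 for c in range(256) if expand(c) < threshold)

linear_count = codes_below(0.1, lambda c: c / 255)
gamma_count = codes_below(0.1, gamma_expand)
print(f"codes below 10% luminance: linear={linear_count}, gamma {GAMMA}={gamma_count}")
```

With the power law, roughly a third of all codes describe the darkest tenth of the luminance range, where the eye is most sensitive to steps.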

And then it happened: people started plugging television sets into computers.

But the poor TV has no idea that it is now connected to a computer and faithfully gamma-expands all the RGB values as if they were still being sent by a broadcaster. This implicit gamma expansion has been a reality for decades and has shaped how people expect RGB colors to look. Then in 1995, at the dawn of the web, Microsoft and HP gave this mess a name and called it “standard RGB”, which is nothing more than a euphemism for “how your average uncalibrated monitor displays your RGB values”.

It should be clear that the advantage of having a display gamma in the digital domain is similar to the advantage of µ‑law encoding for audio: a large dynamic range can be encoded with fewer bits.
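For the digital case, the sRGB standard (IEC 61966-2-1) replaces the pure power law with a short linear segment near black followed by a 2.4 power curve; overall it behaves much like gamma 2.2. The sketch below implements both directions for normalized values and compares the darkest non-zero step of an 8-bit channel with and without the sRGB encoding, which is one way to see the larger dynamic range per bit. The function names are illustrative.

```python
def srgb_to_linear(c: float) -> float:
    """sRGB decode (gamma expansion): encoded value in [0, 1] -> linear value."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(v: float) -> float:
    """sRGB encode (gamma compression): linear value in [0, 1] -> encoded value."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055

# Smallest non-zero linear value that an 8-bit channel can represent.
print("8-bit linear:", 1 / 255)
print("8-bit sRGB:  ", srgb_to_linear(1 / 255))
```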

How the ARB missed an important point

Modern graphics hardware usually has the ability to do gamma expansion as part of the texture fetch. This feature is enabled by selecting an appropriate pixel format for the texture, such as GL_SRGB8_ALPHA8 in OpenGL or DXGI_FORMAT_R8G8B8A8_UNORM_SRGB in Direct3D. This ensures that the pixel shader sees the original color values, not the gamma-compressed ones. But note what the OpenGL sRGB extension has to say about this matter:

> 6) Should all component sizes be supported for sRGB components or just 8-bit?
>
> RESOLVED: Just 8-bit. For sRGB values with more than 8 bit of precision, a linear representation may be easier to work with and adequately represent dim values. Storing 5-bit and 6-bit values in sRGB form is unnecessary because applications sophisticated enough to sRGB to maintain color precision will demand at least 8-bit precision for sRGB values.
>
> Because hardware tables are required sRGB conversions, it doesn't make sense to burden hardware with conversions that are unlikely when 8-bit is the norm for sRGB values.

If this is meant as a subtle form of flattery, it doesn’t get to me. Yes, I am sophisticated enough for sRGB in my applications, but no, I do not demand at least 8‑bit precision in all cases. Quite to the contrary: while the motivation of the hardware guys is understandable, I would make sRGB support for 4‑bit and 5‑bit precision mandatory, so that dynamic textures can save bandwidth while still benefiting from the conversion hardware.
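To put a number on why sRGB at 4 or 5 bits is still worthwhile, the sketch below uses the standard sRGB decoding formula (IEC 61966-2-1) and computes the linear value of the darkest non-zero code at several bit depths, for a linear and for an sRGB-encoded channel. Under this formula, the first step of a 5-bit sRGB channel ends up finer than the first step of an 8-bit linear channel. The helper names are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """sRGB decode for a normalized value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def darkest_step(bits: int, srgb: bool) -> float:
    """Linear value of the smallest non-zero code of an n-bit UNORM channel."""
    c = 1 / (2 ** bits - 1)
    return srgb_to_linear(c) if srgb else c

for bits in (4, 5, 6, 8):
    print(f"{bits}-bit: linear {darkest_step(bits, False):.5f}, "
          f"sRGB {darkest_step(bits, True):.5f}")
```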

There was a brief window in time when 5‑bit sRGB support was available in DirectX 9 hardware like the R300, until about late 2005. Then suddenly and silently, sRGB support for anything but 8‑bit precision was removed in newer hardware, and was also disabled for existing hardware in newer drivers. What the …? Yes exactly, that’s what I thought. At that time we were in the final stages of Spellforce 2 development, and breaking changes like these of course are always welcome.

The story about dynamically baked 5:6:5 sRGB terrain textures

Spellforce 2 is one of the few games from 2006 to implement a linear lighting model via the aforementioned hardware features. The colors from all textures are gamma-expanded before entering the lighting equations in the shader, and then gamma-compressed again before they are written out to the frame buffer. This is the standard linear lighting process.
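Reduced to a single channel, that process looks roughly like the sketch below. Assumptions: the lighting is a plain scalar multiply standing in for the real shader, and the standard sRGB formulas stand in for the hardware conversions. The second shading function shows what the same multiply does when applied to the still-encoded value, which is what lighting without gamma expansion amounts to. All names are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """Gamma expansion (sRGB decode), as done by the texture fetch."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_to_srgb(v: float) -> float:
    """Gamma compression (sRGB encode), as done before the frame buffer write."""
    if v <= 0.0031308:
        return v * 12.92
    return 1.055 * v ** (1 / 2.4) - 0.055

def shade_linear(albedo_srgb: float, light: float) -> float:
    """Expand, light in linear space, compress again."""
    return linear_to_srgb(srgb_to_linear(albedo_srgb) * light)

def shade_naive(albedo_srgb: float, light: float) -> float:
    """Light the encoded value directly (no gamma expansion)."""
    return albedo_srgb * light

print("linear lighting:", shade_linear(0.8, 0.5))
print("naive lighting: ", shade_naive(0.8, 0.5))
```

Halving the light in linear space yields a noticeably brighter encoded value than simply halving the encoded value, which is why the naive approach makes shading look too dark and contrasty.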

The Spellforce 2 terrain system could dynamically bake and cache distant tiles into low-resolution 16-bpp textures (RGB 5:6:5). Having such a cache was absolutely crucial for performance, but it took up a significant chunk of video memory. As with all other textures, the terrain textures had to be gamma-expanded before entering the lighting equations. In DirectX 9, this conversion was enabled via a “toggle switch” (D3DSAMP_SRGBTEXTURE) rather than by the more modern approach of a separate pixel format. You could switch it on for any bit depth (4‑bit, 5‑bit, 8‑bit, it didn’t matter). That is, until the hardware vendors changed their minds.
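For readers who have not worked with 16-bpp formats, the sketch below shows how a normalized color is packed into and unpacked from an RGB 5:6:5 word, and what that saves compared with 32-bit RGBA8 per tile. The 256×256 tile size and the function names are hypothetical, not Spellforce 2’s actual values. Since the cached tiles still needed gamma expansion on fetch, the values being packed would presumably be the gamma-compressed ones.

```python
def pack_rgb565(r: float, g: float, b: float) -> int:
    """Pack normalized (0..1) channels into 16 bits: 5 red, 6 green, 5 blue."""
    def q(v: float, levels: int) -> int:
        # Clamp to [0, 1], then round to the nearest of `levels + 1` codes.
        return round(min(max(v, 0.0), 1.0) * levels)
    return (q(r, 31) << 11) | (q(g, 63) << 5) | q(b, 31)

def unpack_rgb565(texel: int) -> tuple[float, float, float]:
    """Unpack a 16-bit RGB 5:6:5 word into normalized channels."""
    return (((texel >> 11) & 0x1F) / 31,
            ((texel >> 5) & 0x3F) / 63,
            (texel & 0x1F) / 31)

TILE = 256  # hypothetical tile edge length in texels
print("RGB 5:6:5 tile:", TILE * TILE * 2, "bytes")
print("RGBA8 tile:    ", TILE * TILE * 4, "bytes")
```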

This change, of course, forced me to either a) do arithmetic gamma expansion in the shader or b) use 8‑bit precision for the terrain tile cache. Since b) was not an option, I implemented a) as a cheap squaring (multiplying the color by itself). This trick effectively implements a gamma 2.0 expansion curve, which differs from the approximately gamma 2.2 curve built into the hardware tables. As a result, the colors of distant terrain tiles never exactly match their close-up counterparts. You could say we were lucky that we didn’t have to make such a change after Spellforce 2 was released!
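The size of that mismatch can be estimated in a few lines: the sketch below compares the shader’s squaring with the sRGB decoding formula at every code of a 5-bit channel and reports the largest difference in linear space. It uses the standard sRGB formula as a stand-in for the hardware tables, which is an assumption; actual tables may differ slightly. Note that the two curves cross: squaring comes out darker near black (where sRGB has its linear segment) and brighter in the mid-tones.

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decode, standing in for the hardware conversion tables."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def square_decode(c: float) -> float:
    """Shader workaround: gamma 2.0 expansion by multiplying the color by itself."""
    return c * c

codes = [i / 31 for i in range(32)]  # every code of a 5-bit channel
diffs = [square_decode(c) - srgb_to_linear(c) for c in codes]
worst = max(diffs, key=abs)
worst_code = diffs.index(worst)
print(f"largest linear-space difference: {worst:+.4f} at 5-bit code {worst_code}")
```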

Conclusion and Recommendation

The conclusion of this article is: yes, sRGB texture formats are like µ‑law encoding. They are about encoding and nothing but encoding. They are not about gamma correction, because using sRGB textures doesn’t “correct” anything; it only undoes one very specific gamma-compression curve. The term “correction” implies being nit-picky about that last bit of color precision, which isn’t the issue here. The issue is a difference in encoding. And under no circumstances are users who are “sophisticated enough for sRGB in their apps” so “picky about color reproduction” that they would “never want” anything less than 8‑bit precision (emphasis mine)!

In light of mobile GPUs and the never-ending quest to reduce memory traffic, it would be very useful to have automatic sRGB conversion for lower bit depths. Imagine things like dynamic cube maps or, say, terrain tile caches ;)

EDIT: I wouldn’t mind if the conversion from lower bit depths were implemented by first expanding to 8 bits and then using the 8‑bit LUT. Precision-wise, I would consider this one and the same, so as far as I’m concerned there is no need for additional 4‑bit and 5‑bit tables. Circuitry for such a process has to be built into the hardware anyway if S3TC/DXT texture compression formats are used together with sRGB conversion, and it could be reused for uncompressed low-bit-depth formats.
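The expand-then-look-up idea can be modelled in software as follows: widen each low-bit channel to 8 bits, then index a single 256-entry sRGB table, so no extra 4‑, 5‑ or 6‑bit tables are needed. Assumptions: widening is done by bit replication, a common way to expand UNORM values, and the table is filled from the standard sRGB formula. The names are hypothetical, and this is not a description of how any particular GPU implements it.

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decode for a normalized value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

# The one and only table: 256 entries, indexed by an 8-bit sRGB code.
SRGB8_LUT = [srgb_to_linear(i / 255) for i in range(256)]

def widen_to_8bit(value: int, bits: int) -> int:
    """Widen an n-bit UNORM code (1 <= bits <= 8) to 8 bits by bit replication."""
    out, filled = 0, 0
    while filled < 8:
        out = (out << bits) | value  # append another copy of the bit pattern
        filled += bits
    return out >> (filled - 8)       # keep the top 8 bits

def decode_rgb565_srgb(texel: int) -> tuple[float, float, float]:
    """Decode an sRGB-encoded RGB 5:6:5 texel via the shared 8-bit table."""
    r = widen_to_8bit((texel >> 11) & 0x1F, 5)
    g = widen_to_8bit((texel >> 5) & 0x3F, 6)
    b = widen_to_8bit(texel & 0x1F, 5)
    return (SRGB8_LUT[r], SRGB8_LUT[g], SRGB8_LUT[b])
```

For example, the 5-bit code 16 (binary 10000) widens to 132 (binary 10000100), and that index is then looked up in the same table an RGBA8 sRGB texture would use.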

References

[1] James A. Moyer, 1936, “Radio Receiving and Television Tubes”, http://www.tubebooks.org/…
