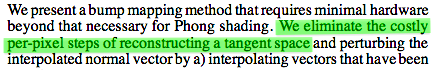

This post is a follow-up to my 2006 ShaderX5 article [4] about normal mapping without a pre-computed tangent basis. In the time since then I have refined this technique with lessons learned in real life. For those unfamiliar with the topic, the motivation was to construct the tangent frame on the fly in the pixel shader, which ironically is the exact opposite of the motivation from [2]:

Since it is not 1997 anymore, doing the tangent space on-the-fly has some potential benefits, such as reduced complexity of asset tools, per-vertex bandwidth and storage, attribute interpolators, transform work for skinned meshes and last but not least, the possibility to apply normal maps to any procedurally generated texture coordinates or non-linear deformations.

Intermission: Tangents vs Cotangents

The way that normal mapping is traditionally defined is, I think, flawed, and I would like to point this out with a simple C++ metaphor. Suppose we had a class for vectors, for example called Vector3, but we also had a different class for covectors, called Covector3. The latter would be a clone of the ordinary vector class, except that it behaves differently under a transformation (Edit 2018: see this article for a comprehensive introduction to the theory behind covectors and dual spaces). As you may know, normal vectors are an example of such covectors, so we’re going to declare them as such. Now imagine the following function:

```cpp
Vector3 tangent;
Vector3 bitangent;
Covector3 normal;

Covector3 perturb_normal( float a, float b, float c )
{
    return a * tangent +
           b * bitangent +
           c * normal;
           // ^^^^ compile-error: type mismatch for operator +
}
```

The above function mixes vectors and covectors in a single expression, which in this fictional example leads to a type mismatch error. If the normal is of type Covector3, then the tangent and the bitangent should be too, otherwise they cannot form a consistent frame, can they? In real life shader code of course, everything would be defined as float3 and be fine, or rather not.
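To make the difference in transformation behavior concrete, here is a minimal sketch in Python (plain tuples, no libraries). The helper names and the example numbers are made up for this illustration. It assumes the usual rule that a vector transforms with the matrix M itself while a covector transforms with the inverse transpose of M, and it uses a diagonal (non-uniform scale) matrix so that both can be written as component-wise products.

```python
# Illustration only: the helper names are hypothetical, not from the article.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def apply_diag(v, d):
    # multiply v by a diagonal matrix whose diagonal is d
    return tuple(x * k for x, k in zip(v, d))

# Non-uniform scale M = diag(2, 1, 1); its inverse transpose is diag(1/2, 1, 1).
M = (2.0, 1.0, 1.0)
M_inv_T = tuple(1.0 / k for k in M)

tangent = (1.0, 1.0, 0.0)   # lies in the surface
normal = (1.0, -1.0, 0.0)   # perpendicular to the tangent

t_new = apply_diag(tangent, M)             # vectors transform with M
n_as_vector = apply_diag(normal, M)        # normal wrongly treated as a vector
n_as_covector = apply_diag(normal, M_inv_T)  # normal treated as a covector

print(dot(t_new, n_as_vector))    # 3.0, no longer perpendicular
print(dot(t_new, n_as_covector))  # 0.0, still perpendicular
```

With a uniform scale or a pure rotation both variants would agree up to length, which is why the mismatch tends to go unnoticed.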

Mathematical Compile Error

Unfortunately, the above mismatch is exactly how the ‘tangent frame’ for the purpose of normal mapping was introduced by the authors of [2]. This type mismatch is invisible as long as the tangent frame is orthogonal. When the exercise is however to reconstruct the tangent frame in the pixel shader, as this article is about, then we have to deal with a non-orthogonal screen projection. This is the reason why in the book I had introduced both ![]() (which should be called co-tangent) and

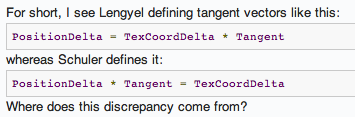

(which should be called co-tangent) and ![]() (now it gets somewhat silly, it should be called co-bi-tangent) as covectors, otherwise the algorithm does not work. I have to admit that I could have been more articulate about this detail. This has caused real confusion, cf from gamedev.net:

(now it gets somewhat silly, it should be called co-bi-tangent) as covectors, otherwise the algorithm does not work. I have to admit that I could have been more articulate about this detail. This has caused real confusion, cf from gamedev.net:

The discrepancy is explained above, as my ‘tangent vectors’ are really covectors. The definition on page 132 is consistent with that of a covector, and so the frame ![]() should be called a cotangent frame.

should be called a cotangent frame.

Intermission 2: Blinn’s Perturbed Normals (History Channel)

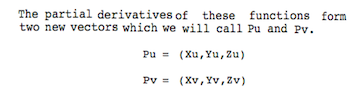

In this section I would like to show how the definition of $\vec{T}$ and $\vec{B}$ as covectors follows naturally from Blinn’s original bump mapping paper [1]. Blinn considers a curved parametric surface, for instance, a Bezier-patch, on which he defines tangent vectors $\vec{p}_u$ and $\vec{p}_v$ as the derivatives of the position $\vec{p}$ with respect to $u$ and $v$.

In this context it is a convention to use subscripts as a shorthand for partial derivatives, so he is really saying $\vec{p}_u = \partial \vec{p} / \partial u$, etc. He also introduces the surface normal $\vec{N} = \vec{p}_u \times \vec{p}_v$ and a bump height function $f$, which is used to displace the surface. In the end, he arrives at a formula for a first order approximation of the perturbed normal:

$$\vec{N}' \simeq \vec{N} + \frac{f_u \, \vec{N} \times \vec{p}_v + f_v \, \vec{p}_u \times \vec{N}}{|\vec{N}|} .$$

I would like to draw your attention towards the terms $\vec{N} \times \vec{p}_v$ and $\vec{p}_u \times \vec{N}$. They are the perpendiculars to $\vec{p}_u$ and $\vec{p}_v$ in the tangent plane, and together form a vector basis for the displacements $f_u$ and $f_v$. They are also covectors (this is easy to verify as they behave like covectors under transformation) so adding them to the normal does not raise said type mismatch. If we divide these terms one more time by $|\vec{N}|$ and flip their signs, we’ll arrive at the ShaderX5 definition of $\vec{T}$ and $\vec{B}$ as follows:

$$\vec{T} = -\frac{\vec{N} \times \vec{p}_v}{|\vec{N}|^2} = \nabla u , \qquad \vec{B} = -\frac{\vec{p}_u \times \vec{N}}{|\vec{N}|^2} = \nabla v ,$$

$$\vec{N}' \simeq \hat{N} - \left( f_u \vec{T} + f_v \vec{B} \right) ,$$

where the hat (as in $\hat{N}$) denotes the normalized normal. $\vec{T}$ can be interpreted as the normal to the plane of constant $u$, and likewise $\vec{B}$ as the normal to the plane of constant $v$. Therefore we have three normal vectors, or covectors, $\vec{T}$, $\vec{B}$ and $\vec{N}$, and they are a basis of a cotangent frame. Equivalently, $\vec{T}$ and $\vec{B}$ are the gradients of $u$ and $v$, which is the definition I had used in the book. The magnitude of the gradient therefore determines the bump strength, a fact that I will discuss later when it comes to scale invariance.
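The gradient property can be checked numerically. The following is a small Python sketch under stated assumptions: it takes the definitions as T = -(N x p_v) / |N|^2 and B = -(p_u x N) / |N|^2 with N = p_u x p_v, and it uses made-up, deliberately non-orthogonal tangent vectors. A gradient of u must give 1 along p_u and 0 along p_v (and vice versa for v), which is what the printed values show.

```python
# Illustration only: example vectors and helper names are assumptions.

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def scale(v, s):
    return tuple(x * s for x in v)

# deliberately skewed (non-orthogonal) tangent vectors
p_u = (1.0, 0.0, 0.0)
p_v = (1.0, 2.0, 0.0)

N = cross(p_u, p_v)   # unnormalized normal
NN = dot(N, N)        # |N|^2

T = scale(cross(N, p_v), -1.0 / NN)   # T = -(N x p_v) / |N|^2
B = scale(cross(p_u, N), -1.0 / NN)   # B = -(p_u x N) / |N|^2

# T behaves like the gradient of u, B like the gradient of v
print(dot(T, p_u), dot(T, p_v))   # change of u along p_u and along p_v
print(dot(B, p_u), dot(B, p_v))   # change of v along p_u and along p_v
```

Note that T differs from p_u here, because the frame is skewed; the two only line up (apart from length) when p_u and p_v are orthogonal.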

A Little Unlearning

The mistake of many authors is to unwittingly take $\vec{T}$ and $\vec{B}$ for $\vec{p}_u$ and $\vec{p}_v$, which only works as long as the vectors are orthogonal. Let’s unlearn ‘tangent’, relearn ‘cotangent’, and repeat the historical development from this perspective: Peercy et al. [2] precompute the values $f_u$ and $f_v$ (the change of bump height per change of texture coordinate) and store them in a texture. They call it a ‘normal map’, but it is really something like a ‘slope map’, and such maps have been reinvented recently under the name of derivative maps. Such a slope map cannot represent horizontal normals, as this would need an infinite slope. It also needs some ‘bump scale factor’ stored somewhere as meta data. Kilgard [3] introduces the modern concept of a normal map as an encoded rotation operator, which does away with the approximation altogether, and instead defines the perturbed normal directly as

$$\vec{N}' = a \vec{T} + b \vec{B} + c \hat{N} ,$$

where the coefficients $a$, $b$ and $c$ are read from a texture. Most people would think that a normal map stores normals, but this is only superficially true. The idea of Kilgard was, since the unperturbed normal has coordinates $(0, 0, 1)$, it is sufficient to store the last column of the rotation matrix that would rotate the unperturbed normal to its perturbed position. So yes, a normal map stores basis vectors that correspond to perturbed normals, but it really is an encoded rotation operator. The difficulty starts to show up when normal maps are blended, since this is then an interpolation of rotation operators, with all the complexity that goes with it (for an excellent review, see the article about Reoriented Normal Mapping [5] here).

Solution of the Cotangent Frame

The problem to be solved for our purpose is the opposite as that of Blinn, the perturbed normal is known (from the normal map), but the cotangent frame is unknown. I’ll give a short revision of how I originally solved it. Define the unknown cotangents ![]() and

and ![]() as the gradients of the texture coordinates

as the gradients of the texture coordinates ![]() and

and ![]() as functions of position

as functions of position ![]() , such that

, such that

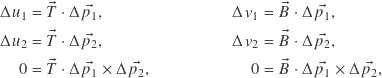

![]()

where $\cdot$ is the dot product. The gradients are constant over the surface of an interpolated triangle, so introduce the edge differences $\Delta \vec{p_{1,2}}$, $\Delta u_{1,2}$ and $\Delta v_{1,2}$. The unknown cotangents have to satisfy the constraints

$$\begin{aligned}
\Delta \vec{p_1} \cdot \vec{T} &= \Delta u_1 , & \Delta \vec{p_1} \cdot \vec{B} &= \Delta v_1 , \\
\Delta \vec{p_2} \cdot \vec{T} &= \Delta u_2 , & \Delta \vec{p_2} \cdot \vec{B} &= \Delta v_2 , \\
\left( \Delta \vec{p_1} \times \Delta \vec{p_2} \right) \cdot \vec{T} &= 0 , & \left( \Delta \vec{p_1} \times \Delta \vec{p_2} \right) \cdot \vec{B} &= 0 ,
\end{aligned}$$

where $\times$ is the cross product. The first two rows follow from the definition, and the last row ensures that $\vec{T}$ and $\vec{B}$ have no component in the direction of the normal. The last row is needed because otherwise the problem is underdetermined. It is straightforward then to express the solution in matrix form. For $\vec{T}$,

$$\vec{T} = \begin{pmatrix} \Delta \vec{p_1} \\ \Delta \vec{p_2} \\ \Delta \vec{p_1} \times \Delta \vec{p_2} \end{pmatrix}^{-1} \begin{pmatrix} \Delta u_1 \\ \Delta u_2 \\ 0 \end{pmatrix} ,$$

and analogously for $\vec{B}$ with $\Delta v$.
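As a sanity check of the linear system, here is a minimal Python sketch that solves it for one made-up triangle and verifies the constraints. The function name `solve3`, the edge vectors and the texture differences are assumptions chosen for illustration; the solver builds the inverse from the adjugate (three cross products) divided by the determinant.

```python
# Illustration only: solve3 and the example triangle are hypothetical.

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def solve3(r0, r1, r2, rhs):
    """Solve the 3x3 system whose matrix has rows r0, r1, r2."""
    det = dot(cross(r0, r1), r2)
    # columns of the adjugate, built from cross products of the rows
    c0, c1, c2 = cross(r1, r2), cross(r2, r0), cross(r0, r1)
    return tuple((c0[i] * rhs[0] + c1[i] * rhs[1] + c2[i] * rhs[2]) / det
                 for i in range(3))

# edge differences of a skewed triangle and its texture coordinates
dp1, dp2 = (2.0, 0.0, 0.0), (1.0, 2.0, 0.0)
du1, du2 = 1.0, 0.5
dv1, dv2 = 0.5, 1.0

n = cross(dp1, dp2)   # face normal, third row of the matrix
T = solve3(dp1, dp2, n, (du1, du2, 0.0))
B = solve3(dp1, dp2, n, (dv1, dv2, 0.0))

print(T, B)
print(dot(T, dp1), dot(T, dp2), dot(T, n))   # should reproduce du1, du2, 0
print(dot(B, dp1), dot(B, dp2), dot(B, n))   # should reproduce dv1, dv2, 0
```

In this example T and B come out non-orthogonal, which is exactly the case where confusing them with tangent vectors would give a wrong frame.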

Into the Shader Code

The above result looks daunting, as it calls for a matrix inverse in every pixel in order to compute the cotangent frame! However, many symmetries can be exploited to make that almost disappear. Below is an example of a function written in GLSL to calculate the inverse of a 3×3 matrix. A similar function written in HLSL appeared in the book, and then I tried to optimize the hell out of it. Forget this approach as we are not going to need it at all. Just observe how the adjugate and the determinant can be made from cross products:

```glsl
mat3 inverse3x3( mat3 M )
{
    // The original was written in HLSL, but this is GLSL,
    // therefore
    // - the array index selects columns, so M_t[0] is the
    //   first column of M_t, etc.
    // - the mat3 constructor assembles columns, so
    //   cross( M_t[1], M_t[2] ) becomes the first column
    //   of the adjugate, etc.
    // - for the determinant, it does not matter whether it is
    //   computed with M or with M_t; but using M_t makes it
    //   easier to follow the derivation in the text
    mat3 M_t = transpose( M );
    float det = dot( cross( M_t[0], M_t[1] ), M_t[2] );
    mat3 adjugate = mat3( cross( M_t[1], M_t[2] ),
                          cross( M_t[2], M_t[0] ),
                          cross( M_t[0], M_t[1] ) );
    return adjugate / det;
}
```

We can substitute the rows of the matrix from above into the code, then expand and simplify. This procedure results in a new expression for $\vec{T}$. The determinant becomes $\left| \Delta \vec{p_1} \times \Delta \vec{p_2} \right|^2$, and the adjugate can be written in terms of two new expressions, let’s call them $\Delta \vec{p_1}_\perp$ and $\Delta \vec{p_2}_\perp$ (with $\perp$ read as ‘perp’), which becomes

$$\vec{T} = \frac{1}{\left| \Delta \vec{p_1} \times \Delta \vec{p_2} \right|^2} \begin{pmatrix} \Delta \vec{p_2}_\perp \\ \Delta \vec{p_1}_\perp \\ \Delta \vec{p_1} \times \Delta \vec{p_2} \end{pmatrix}^\mathrm{T} \begin{pmatrix} \Delta u_1 \\ \Delta u_2 \\ 0 \end{pmatrix} ,$$

$$\Delta \vec{p_2}_\perp = \Delta \vec{p_2} \times \left( \Delta \vec{p_1} \times \Delta \vec{p_2} \right) , \qquad \Delta \vec{p_1}_\perp = \left( \Delta \vec{p_1} \times \Delta \vec{p_2} \right) \times \Delta \vec{p_1} .$$

As you might have guessed, $\Delta \vec{p_1}_\perp$ and $\Delta \vec{p_2}_\perp$ are the perpendiculars to the triangle edges in the triangle plane. Say Hello! They are, again, covectors and form a proper basis for cotangent space. To simplify things further, observe:

- The last row of the matrix is irrelevant since it is multiplied with zero.

- The other matrix rows contain the perpendiculars ($\Delta \vec{p_2}_\perp$ and $\Delta \vec{p_1}_\perp$), which after transposition just multiply with the texture edge differences.

- The perpendiculars can use the interpolated vertex normal $\vec{N}$ instead of the face normal $\Delta \vec{p_1} \times \Delta \vec{p_2}$, which is simpler and looks even nicer.

- The determinant (the expression $\left| \Delta \vec{p_1} \times \Delta \vec{p_2} \right|^2$) can be handled in a special way, which is explained below in the section about scale invariance.

Taken together, the optimized code is shown below, which is even simpler than the one I had originally published, and yet higher quality:

```glsl
mat3 cotangent_frame( vec3 N, vec3 p, vec2 uv )
{
    // get edge vectors of the pixel triangle
    vec3 dp1 = dFdx( p );
    vec3 dp2 = dFdy( p );
    vec2 duv1 = dFdx( uv );
    vec2 duv2 = dFdy( uv );

    // solve the linear system
    vec3 dp2perp = cross( dp2, N );
    vec3 dp1perp = cross( N, dp1 );
    vec3 T = dp2perp * duv1.x + dp1perp * duv2.x;
    vec3 B = dp2perp * duv1.y + dp1perp * duv2.y;

    // construct a scale-invariant frame
    float invmax = inversesqrt( max( dot(T,T), dot(B,B) ) );
    return mat3( T * invmax, B * invmax, N );
}
```

Scale invariance

The determinant $\left| \Delta \vec{p_1} \times \Delta \vec{p_2} \right|^2$ was left over as a scale factor in the above expression. This has the consequence that the resulting cotangents $\vec{T}$ and $\vec{B}$ are not scale invariant, but will vary inversely with the scale of the geometry. It is the natural consequence of them being gradients. If the scale of the geometry increases, and everything else is left unchanged, then the change of texture coordinate per unit change of position gets smaller, which reduces $\vec{T}$ and similarly $\vec{B}$ in relation to $\vec{N}$. The effect of all this is a diminished perturbation of the normal when the scale of the geometry is increased, as if a heightfield was stretched.

Obviously this behavior, while totally logical and correct, would limit the usefulness of normal maps to be applied on different scale geometry. My solution was and still is to ignore the determinant and just normalize $\vec{T}$ and $\vec{B}$ to whichever of them is largest, as seen in the code. This solution preserves the relative lengths of $\vec{T}$ and $\vec{B}$, so that a skewed or stretched cotangent space is still handled correctly, while having an overall scale invariance.
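The scale invariance can be demonstrated outside the shader. Below is a rough Python port of the frame construction, with the screen-space differences (dFdx/dFdy in GLSL) passed in explicitly as arguments; the argument list, the helper names and the example numbers are assumptions for this sketch. Scaling the geometry by a factor of 10 while keeping the texture differences fixed should leave the normalized T and B unchanged.

```python
import math

# Illustration only: a CPU-side sketch, not the shader itself.

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def scaled(v, s):
    return tuple(x * s for x in v)

def cotangent_frame(N, dp1, dp2, duv1, duv2):
    # perpendiculars to the edges, using the given normal
    dp2perp = cross(dp2, N)
    dp1perp = cross(N, dp1)
    T = tuple(a * duv1[0] + b * duv2[0] for a, b in zip(dp2perp, dp1perp))
    B = tuple(a * duv1[1] + b * duv2[1] for a, b in zip(dp2perp, dp1perp))
    # normalize by the longer of the two, which keeps their length ratio
    invmax = 1.0 / math.sqrt(max(dot(T, T), dot(B, B)))
    return scaled(T, invmax), scaled(B, invmax)

N = (0.0, 0.0, 1.0)
dp1, dp2 = (2.0, 0.0, 0.0), (1.0, 2.0, 0.0)
duv1, duv2 = (1.0, 0.5), (0.5, 1.0)   # (du, dv) per screen direction

T1, B1 = cotangent_frame(N, dp1, dp2, duv1, duv2)
T2, B2 = cotangent_frame(N, scaled(dp1, 10.0), scaled(dp2, 10.0), duv1, duv2)

print(T1, B1)   # frame for the original geometry
print(T2, B2)   # frame for the geometry scaled by 10
```

Because both T and B are divided by the same factor, a stretched or skewed mapping still shows up as a difference in their lengths and directions.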

Non-perspective optimization

As the ultimate optimization, I also considered what happens when we can assume $\Delta \vec{p_1} = \Delta \vec{p_2}_\perp$ and $\Delta \vec{p_2} = \Delta \vec{p_1}_\perp$. This means we have a right triangle and the perpendiculars fall on the triangle edges. In the pixel shader, this condition is true whenever the screen-projection of the surface is without perspective distortion. There is a nice figure demonstrating this fact in [4]. This optimization saves another two cross products, but in my opinion, the quality suffers heavily should there actually be a perspective distortion.

Putting it together

To make the post complete, I’ll show how the cotangent frame is actually used to perturb the interpolated vertex normal. The function perturb_normal does just that, using the backwards view vector for the vertex position (this is ok because only differences matter, and the eye position goes away in the difference as it is constant).

```glsl
vec3 perturb_normal( vec3 N, vec3 V, vec2 texcoord )
{
    // assume N, the interpolated vertex normal and
    // V, the view vector (vertex to eye)
    vec3 map = texture2D( mapBump, texcoord ).xyz;
#ifdef WITH_NORMALMAP_UNSIGNED
    map = map * 255./127. - 128./127.;
#endif
#ifdef WITH_NORMALMAP_2CHANNEL
    map.z = sqrt( 1. - dot( map.xy, map.xy ) );
#endif
#ifdef WITH_NORMALMAP_GREEN_UP
    map.y = -map.y;
#endif
    mat3 TBN = cotangent_frame( N, -V, texcoord );
    return normalize( TBN * map );
}
```

```glsl
varying vec3 g_vertexnormal;
varying vec3 g_viewvector;  // camera pos - vertex pos
varying vec2 g_texcoord;

void main()
{
    vec3 N = normalize( g_vertexnormal );

#ifdef WITH_NORMALMAP
    N = perturb_normal( N, g_viewvector, g_texcoord );
#endif

    // ...
}
```

The green axis

Both OpenGL and DirectX place the texture coordinate origin at the start of the image pixel data. The texture coordinate (0,0) is in the corner of the pixel where the image data pointer points to. Contrast this to most 3‑D modeling packages that place the texture coordinate origin at the lower left corner in the uv-unwrap view. Unless the image format is bottom-up, this means the texture coordinate origin is in the corner of the first pixel of the last image row. Quite a difference!

An image search on Google reveals that there is no dominant convention for the green channel in normal maps. Some have green pointing up and some have green pointing down. My artists prefer green pointing up for two reasons: It’s the format that 3ds Max expects for rendering, and it supposedly looks more natural with the ‘green illumination from above’, so this helps with eyeballing normal maps.

Sign Expansion

The sign expansion deserves a little elaboration because I try to use signed texture formats whenever possible. With the unsigned format, the value ½ cannot be represented exactly (it’s between 127 and 128). The signed format does not have this problem, but in exchange, has an ambiguous encoding for −1 (can be either −127 or −128). If the hardware is incapable of signed texture formats, I want to be able to pass it as an unsigned format and emulate the exact sign expansion in the shader. This is the origin of the seemingly odd values in the sign expansion.
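The arithmetic behind those constants can be traced with a few byte values. This Python sketch assumes an 8-bit unsigned normalized texel that the texture unit returns as byte / 255, and compares the common `2x - 1` expansion with the `x * 255/127 - 128/127` expansion used in the shader; the function names are made up for the illustration.

```python
# Illustration only: function names are hypothetical.

def naive_expand(byte):
    """Common expansion x * 2 - 1 of an 8-bit unsigned normalized texel."""
    x = byte / 255.0
    return x * 2.0 - 1.0

def exact_expand(byte):
    """Expansion with the constants from the shader; equals (byte - 128) / 127."""
    x = byte / 255.0
    return x * 255.0 / 127.0 - 128.0 / 127.0

for b in (0, 1, 127, 128, 255):
    print(b, naive_expand(b), exact_expand(b))
```

With the naive expansion, bytes 127 and 128 land slightly below and slightly above zero, so zero has no exact code. With the shader's constants, byte 128 decodes to zero, byte 255 to +1 and byte 1 to -1, while byte 0 decodes to -128/127, which mirrors the second encoding for -1 mentioned above.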

In Hindsight

The original article in ShaderX5 was written as a proof-of-concept. Although the algorithm was tested and worked, it was a little expensive for that time. Fast forward to today and the picture has changed. I am now employing this algorithm in real-life projects for great benefit. I no longer bother with tangents as vertex attributes and all the associated complexity. For example, I don’t care whether the COLLADA exporter of Max or Maya (yes I’m relying on COLLADA these days) outputs usable tangents for skinned meshes, nor do I bother to import them, because I don’t need them! For the artists, it doesn’t occur to them that an aspect of the asset pipeline is missing, because it’s all natural: There is a geometry, there are texture coordinates and there is a normal map, and it just works.

Take Away

There are no ‘tangent frames’ when it comes to normal mapping. A tangent frame which includes the normal is logically ill-formed. All there is are cotangent frames in disguise when the frame is orthogonal. When the frame is not orthogonal, then tangent frames will stop working. Use cotangent frames instead.

References

[1] James Blinn, “Simulation of wrinkled surfaces”, SIGGRAPH 1978

http://research.microsoft.com/pubs/73939/p286-blinn.pdf

[2] Mark Peercy, John Airey, Brian Cabral, “Efficient Bump Mapping Hardware”, SIGGRAPH 1997

http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.42.4736

[3] Mark J Kilgard, “A Practical and Robust Bump-mapping Technique for Today’s GPUs”, GDC 2000

http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.18.537

[4] Christian Schüler, “Normal Mapping without Precomputed Tangents”, ShaderX 5, Chapter 2.6, pp. 131 – 140

[5] Colin Barré-Brisebois and Stephen Hill, “Blending in Detail”,

http://blog.selfshadow.com/publications/blending-in-detail/

You do this in a terribly roundabout way, it’s much easier to do

```glsl
vec3 denormTangent = dFdx(texCoord.y)*dFdy(vPos)-dFdx(vPos)*dFdy(texCoord.y);
vec3 tangent = normalize(denormTangent-smoothNormal*dot(smoothNormal,denormTangent));
vec3 normal = normalize(smoothNormal);
vec3 bitangent = cross(normal,tangent);
```

Hi devsh,

that would be equivalent to what the article describes as the “non-perspective-optimization”. In this case you’re no longer making a distinction between tangents and co-tangents. It only works if the tangent frame is orthogonal. In case of per-pixel differences taken with dFdx etc, it would only be correct if the mesh is displayed without perspective distortion, hence the name.


Hello!

First I want to thank you for the algorithm, this is a very necessary thing. It works in my HLSL!

But there is a bug. When the camera gets close to the object, strong per-pixel noise appears in the texture. This is a known problem; no one has solved it yet. Do you have any solution for this, have you thought about it?

Hi Zagolski

This behaviour is likely caused by the differences of the texture coordinate between pixels becoming too small for floating point precision; they are then rounded to zero, which leads to a divide by zero down the line.

Try to eliminate any „half“ precision variable that may affect the texture coordinate or view vector, if there are any.


Wonderful!!!

Does this approach work with mirrored uv’s? We’re seeing some lighting issues where the lighting is incorrect on the mirrored parts of shapes. Maya knows to flip the normals, but doesn’t know to flip the uv directions. So then the TBN frame looks incorrect.

Yes, it should work with mirrored texture coordinates out of the box. Then the frame spanned by T and B simply changes handedness.

Nice work. It was really simple to integrate in my code, the results look very good, and the explanations are just great!

I observed, however, that the first two column vectors of TBN (those that replace the classical interpolated per-vertex tangents and bitangents) are smooth over a triangle, but they are not smooth across triangle edges. Note that the classical interpolated tangents and bitangents are smooth across triangle edges.

Any thoughts on whether this is a problem? To be clear: The final lighting doesn’t seem to look wrong and the normals used for lighting do not appear to have those discontinuities, but I am still curious about your expert opinion on that.

Thanks

Quirin

Hallo Quirin,

as you have noticed, the pseudovectors T and B are faceted. This stems from the fact that all deltas ($\Delta \vec{p}$, etc) are constant for all pixels of a given triangle. If you browse through the earlier comment history then you’ll find many more discussions about this fact. In my opinion this has never been a problem in practice. As you notice, the interpolated normal is used for N, so that is smooth.

Hi, as a bit of a 3D generalist, and relatively new to shaders at that, I can appreciate both the thorough math analysis and the sheer practicality of in-shader computation from nothing else than normals and UVs. It is a blessing to have found this at an early point of my journey, while putting together my first scene viewer app. Thank you for sharing your insight the way you did.

That said, I am not writing just for the sake of praise. I do have a couple of questions, of the “did I get this right?” kind, which may prove useful to other people who try this code in a “toy” project.

1) In the GLSL main() example, g_vertexnormal and g_viewvector are in world space, right? (Because there wouldn’t be a “camera pos” in view or frustum space.) Supposing the program passes raw vertices and normals (in model space) to the shader, along with a bunch of matrices, g_vertexnormal would need to be transformed as a covector, right? (As in, N = normalize(transpose(inverse(mat3(M))) * g_vertexnormal), M being the 4x4 model matrix such that vertex_pos_world = vec3(M * vec4(vertex_pos_model, 1)).)

And of course the adjoint model matrix would only be different from the plain model matrix if non-uniform scaling is involved (right?).

2) Despite N being smooth across triangle edges (in theory at least), I am seeing some subtle lighting discontinuities, especially when I add specular to the mix. My understanding is that the code is really fine, and I didn’t mess up implementing it either, it’s just OpenGL’s default texture filtering. If the code is implemented from scratch (e.g., in a “toy project” such as mine), then discontinuities at edges are to be expected at some viewing angles (despite the algorithm’s accuracy), and will only disappear once I set up proper mipmapping and anisotropic filtering. Right?

Thanks again,

Sergey

Hallo Sergey,

thanks for your comments!

For 1)

Both vectors g_vertexnormal and g_viewvector must be in the same space, and then the calculated normal is in that space too.

- Reason not to do it in model space: possible non-uniform scale, don’t!

- Reason not to do it in view space: while possible, you need to transform all environmental light data from world into view space, which is awkward

So my recommendation is world space orientation, but camera-relative.

For 2)

As I wrote in response to many other commenters: Your code is probably fine. The artifacts are there because the derivatives of the texture coordinates are constant across a triangle. So the T and B vectors do not change over the surface of a triangle. Therefore, the stronger your normal map, the stronger the faceted artifacts, because T and B are only relevant for modifying N according to the normal map, and the direction in which this modification is done may change abruptly across triangle borders. Mipmapping will reduce the normal map intensity by averaging everything out.

Hi Christian,

Checked on the page yesterday. Glad to see my post registered after all, and thanks for the answer!

1) Thanks for the confirmation. I have tried a bunch of different coordinates while debugging, and indeed camera-relative world coordinates make perfect sense for your algorithm. (My first shader attempt was in view space because of some lighting code examples that were written that way, but as you say work in view space would become less and less intuitive for complex lighting.)

2) In your previous reply to Quirin (about a year ago), you made it sound like your algorithm never leads to faceted looks in practice, and that a smooth interpolated normal N is somehow sufficient to ensure a smooth perturbed normal PN, even when T and B are discontinuous across an edge. But now you make a valid point about how a discontinuous T or B will be perturbing the normal by different amounts and in different directions (for the same normal map pixel) on both sides of an edge. So now I am really curious which it is! In the general case, the latter is probably true, i.e., there will always be some meshes that have artifacts because of discontinuous T and B (sad!). But clearly for a symmetric enough edge the discontinuous contributions can cancel out, so that the perturbed normal is still smooth and facet-less. What, then, is the condition for such a nice compensation to occur, and couldn’t the algorithm be adjusted so that it happens more often?

It turns out that, in my case, there actually *was* a bug: my specular code involves a normalized eye vector, so I ended up passing that to perturb_normal instead of a non-normalized one. While debugging, I created several test shapes with well-behaved UVs (antiprisms, tessellated cubes, etc), and it was a relief to see the facets disappear once I fixed that normalization mistake. (I will try stronger normal maps on those test shapes, and maybe write up some shape-specific math, but for symmetric enough edges/UVs I don’t expect discontinuous T and B to cause facetedness per se.) I also have a lot of meshes (third-party FBX files, mostly) for which facets can be seen in many areas, but part of that is probably due to bad UVs/normals/geometry. I’ll try and pinpoint the simplest possible mesh for which your algorithm has significant “faceting”, and then report back.

Cheers,

Sergey

Hallo Sergey,

sorry for not checking more often. But legit comments will get through eventually.

As for 2) this condition was originally termed by Peercy et al as the “square patch assumption”: The UV mapping should look like a square grid, without shear, and without non-uniform scale. Consequently, the TBN frame is then orthogonal, modulo a scale factor, and no visible change occurs across triangles. While not exactly realizable in practice, “good” work on part of the artist may get close to that ideal, and is a desirable quality also for other reasons.

Cheers!

Christian

Hi, thanks for sharing.

Is it necessary to use view vector as p in cotangent_frame? Would it be sufficient to use world position?

Hallo Tomáš,

the view vector and the world vector only differ by the eye vector, which is a constant. Therefore, either view vector or world position may be used for $\vec{p}$, since their derivatives are the same.

Using the view vector should behave better numerically because the world position may have large magnitudes that cancel badly when the derivative function is applied.